SuperX has unveiled the SuperX XN9160-B200 AI Server, its latest flagship product. The next-generation server targets the growing demand for scalable, high-performance computing in AI training, machine learning (ML), and high-performance computing (HPC) workloads. It is built around NVIDIA's Blackwell-architecture B200 GPU.

The XN9160-B200 AI Server accelerates large-scale distributed AI training and inference. It is optimized for GPU-accelerated tasks, including training and inference of foundation models that use reinforcement learning (RL) and distillation, multimodal model training and inference, and HPC applications such as climate modeling, drug discovery, seismic analysis, and insurance risk modeling. SuperX says its performance rivals that of a conventional supercomputer, delivering enterprise-level capabilities in a compact form factor.

With its powerful GPU instances and computational capabilities, the SuperX XN9160-B200 marks a significant milestone in SuperX's AI infrastructure strategy.

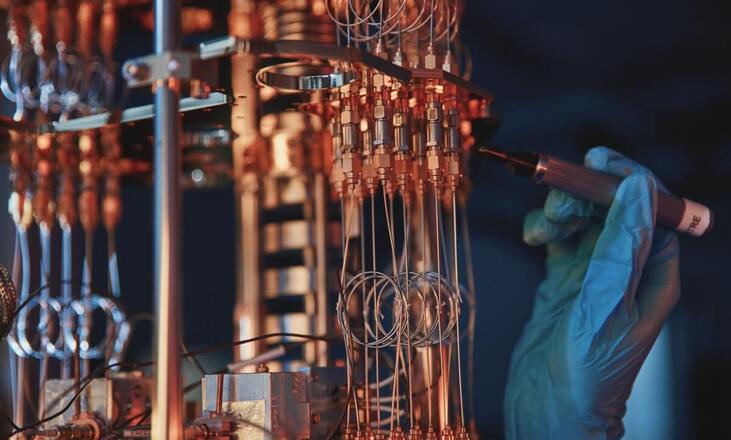

XN9160-B200 AI System

The new XN9160-B200 delivers exceptional AI computing power in a 10U chassis, combining eight NVIDIA Blackwell B200 GPUs, fifth-generation NVLink, 1,440 GB of high-bandwidth HBM3E memory, and 6th Gen Intel Xeon CPUs.

With eight NVIDIA Blackwell B200 GPUs linked by fifth-generation NVLink, the SuperX XN9160-B200's core engine achieves inter-GPU bandwidth of up to 1.8 TB/s. This significantly shortens the R&D cycle for tasks such as pre-training and fine-tuning trillion-parameter models, making large-scale AI model training up to three times faster. With 1,440 GB of HBM3E memory and FP8 precision, the server delivers 58 tokens per second per GPU on the GPT-MoE 1.8T model, more than 15 times the 3.5 tokens per second achieved by the previous-generation H100 platform.
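The headline figures can be sanity-checked with simple arithmetic. The minimal sketch below uses only the numbers quoted above and assumes the 1,440 GB of HBM3E is split evenly across the eight GPUs; it computes the per-GPU memory and the per-GPU inference speedup over the H100 figure.

```python
# Figures quoted in the article
GPU_COUNT = 8
TOTAL_HBM3E_GB = 1440
B200_TOKENS_PER_SEC = 58.0  # per GPU, GPT-MoE 1.8T, FP8
H100_TOKENS_PER_SEC = 3.5   # per GPU, previous-generation platform


def hbm_per_gpu(total_gb: float, gpus: int) -> float:
    """Assumes the total HBM3E capacity is divided evenly across GPUs."""
    return total_gb / gpus


def speedup(new_rate: float, old_rate: float) -> float:
    """Ratio of new throughput to old throughput."""
    return new_rate / old_rate


if __name__ == "__main__":
    print(f"HBM3E per GPU: {hbm_per_gpu(TOTAL_HBM3E_GB, GPU_COUNT):.0f} GB")
    print(f"Per-GPU speedup vs. H100: "
          f"{speedup(B200_TOKENS_PER_SEC, H100_TOKENS_PER_SEC):.1f}x")
```

By these figures each GPU carries 180 GB of HBM3E, and 58 / 3.5 works out to roughly 16.6x, consistent with the "more than 15 times" claim.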

The system also features all-flash NVMe storage, DDR5 memory at 5,600–8,000 MT/s, and 6th Gen Intel® Xeon® CPUs. Together, these components support stable and efficient AI model training and inference, accelerate data pre-processing, keep high-load virtualization scenarios running smoothly, and improve the efficiency of advanced parallel computing.

Powering AI Without Interruption

The XN9160-B200 uses multi-path power redundancy to ensure operational reliability. Its 1+1 redundant 12V power supplies and 4+4 redundant 54V GPU power supplies minimize the risk of a single point of failure and keep the system running stably even if a supply fails unexpectedly, providing uninterrupted power for critical AI workloads.
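To illustrate what "N+M" redundancy means, here is a simplified model, not SuperX's actual power-management logic. It assumes that the supplies within each group are interchangeable and that any N healthy units can carry the group's full load, so up to M units can fail without interrupting power. The group names are hypothetical labels for the two supply groups described above.

```python
# Hypothetical labels for the two supply groups described in the article:
# (units required to carry the load, redundant spare units)
POWER_GROUPS = {
    "12V system": (1, 1),   # 1+1
    "54V GPU": (4, 4),      # 4+4
}


def still_powered(required: int, redundant: int, failed: int) -> bool:
    """Simplified N+M model: power holds while healthy units >= required."""
    installed = required + redundant
    return installed - failed >= required


def max_tolerated_failures(required: int, redundant: int) -> int:
    """Under this model, any `redundant` units may fail without losing power."""
    return redundant


if __name__ == "__main__":
    for name, (n, m) in POWER_GROUPS.items():
        print(f"{name}: tolerates up to {max_tolerated_failures(n, m)} "
              f"failed supply unit(s)")
```

Under these assumptions, the 12V group survives the loss of one supply and the 54V GPU group survives the loss of any four.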

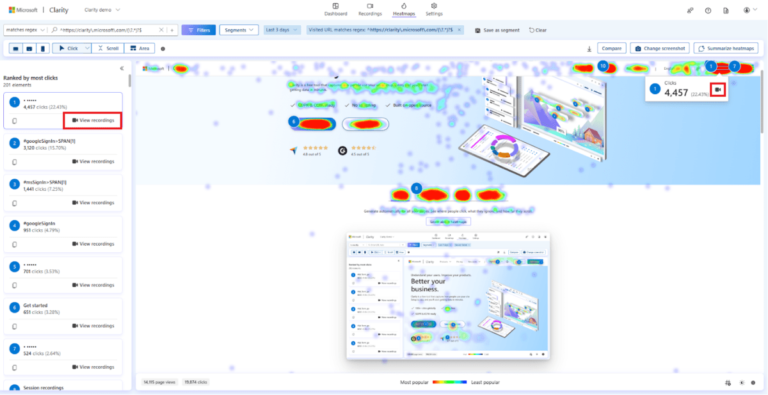

A built-in ASPEED AST2600 baseboard management controller (BMC) on the SuperX XN9160-B200 enables easy remote monitoring and control. Before delivery, each server undergoes more than 48 hours of full-load stress testing, cold- and hot-boot validation, and high/low-temperature aging screening. SuperX, a Singapore-based company, also offers a full-lifecycle service guarantee, a three-year warranty, and expert technical support to help businesses navigate the AI wave.